Deep learning is a form of machine learning that makes computers remarkably adept at detecting and anticipating patterns in data. Because that data can come in many forms, whether it’s audio, video, numbers in a spreadsheet, or the appearance of pedestrians in a street, deep learning is versatile. It can speed up data-intensive applications, like the design of custom molecules for medicine and new materials. And it can open up new applications, like the ability to analyze how minute changes in the activity of your genes affect your health.

To understand why it’s called “deep” learning and why it has sparked a resurgence of hope for artificial intelligence, you have to go back to the 1940s and 1950s. Even though computers at the time were essentially giant calculators, computing pioneers were already imagining that machines might someday think—which is to say they might reason for themselves. And one obvious way to try to make that happen was to mimic the human brain as much as possible.

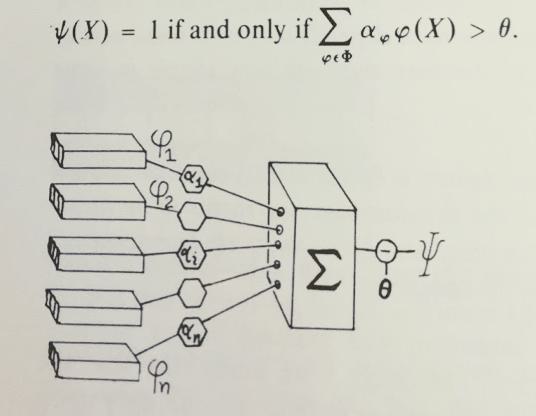

That’s the idea behind “neural networks.” They’re loose approximations of physical neurons and how they are organized. Originally, computer engineers hard-wired them into big machines, and today these neurons are simulated in software. But in either case they’re designed to take an input (for example, all the pixels that make up a digital image)‚ and generate a meaningful output (say, an identification of the image). What determines the output? The behavior of simulated neurons in the network. Much as a physical neuron fires an electrical signal in response to a stimulus and triggers the firing of other brain cells, every simulated neuron in an artificial neural network has a mathematical value that affects the values of other simulated neurons.

Imagine you want to have a computer distinguish between horses and zebras. Every time you vary the input—say you put up an image of a creature with black and white stripes instead of brown hair—that changes the mathematical values of the simulated neurons in the first layers, the ones that analyze the most general features of an image. These changes in value cascade through the network, all the way out to the ones in the top-most layer, the ones that confirm for the machine that it should identify the animal as a zebra. If it fails to do so accurately, you can train the system by adjusting the mathematical values of key nodes in the network. (This method is known as supervised learning; there’s a cool twist on it that we’ll get into a little bit later.)

For decades, the utility of neural networks was mainly theoretical. Computers lacked the processing power to use it in a wide variety of data-intensive tasks. But that has changed dramatically in the past decade, thanks mainly to the advancement of Graphics Processing Units (GPUs) developed for rendering realistic video games (of all things!). Now neural networks can handle a great deal of data at once and cascade it mathematically through layers upon layers of simulated neurons. These ever-more complex networks are “deep” rather than shallow, which is why the process of training them to work is known as deep learning.

The reason we care about these added layers of depth is that they make computers much more finely attuned to small signals—some meaningful patterns—in a blur of data. So, what does that mean in practice? You can think of three major categories of applications: computer perception, computer analysis, and computer creation. These classes of applications—and combinations of them—are providing the foundation for entirely new scientific practices and technologies.

In the first category, you’ve probably already seen a big change in computers’ ability to perceive the world. Deep learning is why digital assistants like Siri and Alexa consistently interpret your spoken commands. Even though they still can’t always deliver the information or perform the task you’d like, they transform the sound of your speech into text with impressive accuracy. This is how computers are identifying cancer from radiology images, and sometimes doing it more reliably than human doctors can. Deep learning also helps self-driving cars process data from the roads—although the slow rollout of automated vehicles should serve as a reminder that this approach isn’t magical and can only do so much.

In the second category, computer analysis: as deep learning algorithms have gotten better at finding signals in data, machines have been able to tackle more sophisticated problems that are impossible for humans because they involve so much information. Medicine is a prime example. Some researchers are using deep-learning algorithms to reanalyze data from past experiments, looking for correlations they missed the first time. Say a drug failed in trials because it worked on only 10 percent of a study’s participants. Did those 10 percent have something meaningful in common? A deep-learning system might spot it. The technology also is being used to model how engineered molecules will behave in the body or in the environment. If a computer can spot patterns that indicate the molecules are probably toxic, that reduces the chances that researchers will waste precious time and money on trials in animals.

Meanwhile, some startup companies are using deep learning to analyze minuscule changes in images or videos of living cells that the naked eye would miss. Other companies are combining various types of medical information—genomic readouts, data from electronic health records, and even models of the mechanisms of certain diseases—to look for new correlations to investigate.

The final category, computer creation, is booming because of two intriguing refinements to deep learning: reinforcement learning and generative adversarial networks, or GANs.

Remember the horse vs. zebra detector that had to be “supervised” in its learning by adjusting the mathematical values of the neural network? With reinforcement learning, programmers do something different. They give a computer a score for its performance on a training task, and it adjusts its own behavior until it maximizes the score. This is how computers have gotten astonishingly good at video games and games like Go. When IBM’s Deep Blue mastered chess two decades ago, it essentially was calculating all the possible moves that would exist after any given move. Such brute force doesn’t work with the bewildering array of possible moves that exist in Go, let alone many real-world problems. Instead, computer scientists used reinforcement learning. They let the computer see for itself whether various patterns of play led to wins or losses.

Now imagine you’re a biomedical researcher who wants to create a protein to attack disease in the body. Rather than getting a reinforcement learning system to optimize its behavior for the highest score in a game, you might optimize a protein-design function to favor the simplest atomic structure. Similarly, manufacturers with networks of machinery can use reinforcement learning to control the performance of individual pieces of equipment so as to favor a factory’s overall efficiency.

Generative adversarial networks bring together all the ideas you’ve just read about. They pit two deep learning networks against each other. One of them tries to create some data from scratch, and the other one evaluates whether the result is realistic. Say you want a totally new design for a chair. One neural network can be told to randomly combine aspects of chairs: various materials, curves, and so on. The other neural network performs a simple evaluation: is that a chair or not? Most of what the first neural network comes up with will be nonsense. But the other neural network will reject those, leaving behind only chairs that look real, despite not having ever existed in reality. Now you have a plausible set of new chair designs to tinker with. These same approaches can be used to design new drug molecules with a higher likelihood of success.

GANs can have creepy implications, like when they generate fake photos, audio or video that feels utterly real. But the best way to think of them is as another machine-learning tool—one of several reasons that a technology that has been developing for decades is getting more efficient—and likelier to unlock new discoveries.

Many of Flagship Pioneering’s companies use machine learning techniques to develop new drug discovery platforms, including a number of our most recent prototype companies, and NewCos such as Cogen, which is developing a platform to control the immune system’s response to treat cancer, autoimmune diseases, and chronic infections.